Why we shouldn't plagiarize with AI! 👟👟👟

Today I was having a discussion with a disgruntled HIVE user who was accused, by the HELIOS @hive.defender team, of a mild case of paraphrasing plagiarism.

Initially, we decided not to flag the user and told him right off the bat that it was only a warning. However, the mere sight of our simple warning triggered a spree of repetitive questions, which turned into a slew of insults and ended in a metaphor involving a donkey and me.

Basically, this user couldn't wrap his head around how an AI could have written about his personal experiences, when the answer is simply "paraphrasing". That's what this whole incident stemmed from in the first place. We were simply warning the user that if he uses GPT or another GPT-based paraphrasing tool to rewrite his personal point of view, he should be aware that it could trigger @hivewatchers or @ecency too.

At the end of the day, when cooler heads should have prevailed, it was head-butting time instead.

I had become irritated by the user's inept understanding of the word paraphrasing, and I had spent far too much time replying back and forth between insulting appeals that were much ado about nothing. So I decided to send him a courtesy flag. Now at least he has something to complain about.

The moral of this story is that if you get a simple warning about something, whether you are right or wrong, it might be better to take the high road and "Let it roll off your shoulder!" Getting triggered by a flag or a warning here on HIVE and escalating the situation in true troll fashion rarely solves anything and only digs a deeper grave for you to sleep in.

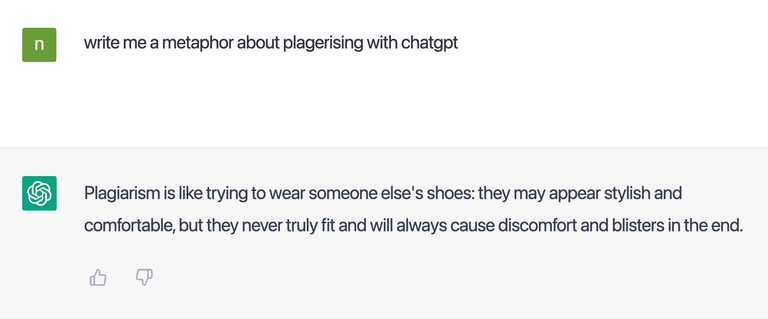

This whole situation should have been avoidable, but one good thing I got out of it was the metaphor I used as the thumbnail for this post. Since the antagonist of this story is so fond of metaphors, I had ChatGPT create one that captures the idea of plagiarizing with ChatGPT.

Plagiarism is like trying to wear someone else's shoes: they may appear stylish and comfortable, but they never truly fit and will always cause discomfort and blisters in the end.

-- ChatGPT

ChatGPT chose an interesting metaphor. I would rather walk in my own shoes.

Wow! AI is very impressive these days. Soon it may become self-aware and have a ‘mind’ of its own. It might dislike something so much that it starts trolling those people forever! Scary!

OMG, AI troll bot armies could spell DOOM for HIVE one day! This is the perfect playground/battleground for something like that to flourish. HIVE is going to need some kind of HUMAN-only 3D CAPTCHA mechanism built into the blockchain soon, I reckon.

This is rather tough, as I wouldn’t be able to tell if I was interacting with a bot! Some people could even fall in love with romance-scammer bots!

The problem is: how do we recognise posts that paraphrase text produced by ChatGPT? Also, technically, paraphrased text could be said not to be 100% AI-produced. This is quite a problem!

Yes, it is indeed tricky. ChatGPT 3.5 underlines the lines that were paraphrased according to its matching algorithm. Also, we don't flag anything unless it is well over 50% paraphrased according to the OpenAI detector. It's a fine line to navigate; however, the benefit of the doubt will be given in all borderline cases. For the most part, we're only going after the 80-90% matches.

I understand. So, in any case, many could circumvent the mechanism by paraphrasing at least 50 per cent of the published article, in which case the benefit of the doubt is rightly given.

What I wonder about is repeat offenders. That is, if it happens once for a single article, you turn a blind eye. But if a person publishes 50% paraphrased content every day, you take action, right? In that case you can't give the benefit of the doubt every time :)

Exactly, we take it on a case-by-case basis. We examine older writings, look at the user's history, and compare it with the new posts. Whether or not there is a drastic change can play into the equation too. Once someone has been warned, the next 50% is worse, and a string of them would mean they have not learned their lesson. Anyway, two 50%s add up to 100%, so we won't turn a blind eye to someone gaming the system like that. We always follow up after a first flag or warning. Personally, I think half AI is just as bad.